Pi Pico SPI LCD Driver Using RAM Frame Buffer – ILI9341 and ST7789

12th April 2022

Great 10 Inch Raspberry Pi Display With 5 Point Multitouch

11th May 2022Multi Thread Coding on the Raspberry Pi Pico in Micropython

I’ve been using the Raspberry Pi Pico in a few of my projects and it’s very much taken over from the Arduino for me. The Pico is a powerful microcontroller board. Not only does it have 264KB of RAM and 2MB of Flash ROM but it also has two ARM Cortex-M0+ processor cores running at up to 133 MHz, which can even be overclocked up over 200MHz. This makes it orders of magnitude more powerful than the standard Arduino.

Add to that the ability to use MicroPython for development and the Pico becomes a fantastic platform for learning to build your own devices.

In this video it’s the dual core processor that I want to focus on. With our normal projects we end up running everything on a single core leaving half the processing power unused. Whilst not every bit of code can make use of two cores, many can reap real benefits from parallel processing.

With MicroPython running two threads is very easy as long as you plan out what you’re going to do.

So let me show you how it’s done.

Simple Two Core Coding

The basic idea of using two cores is quite simple.

If we can divide our code into two separate parts we can then run each part on its own core. The two halves can communicate with each other and synchronise actions.

MicroPython provides a _thread package to handle the division and running of our code on separate cores.

https://docs.micropython.org/en/latest/library/_thread.html

The _thread Multi-Threading Package

Note : At the time of recording this package is still in its development stage. It works, but there are a few issues that we’ll hit and have to work around. The big issue I found was that processing data on the second core seemed to generate a lot of temporary information that got left in RAM. The garbage collection system didn’t clear it quickly enough leading to a system crash. Explicitly running the garbage collection process as part of the code loop was the only workaround. Please see the SPI LCD example later to see this in action.

If you go to the MicroPython documentation you’ll find that it points you to the main CPython version of the package. Most of the features have been implemented to allow you low level control of the two threads running on the two processor cores.

To use the package we simply need to import it at the top of our code.

import _thread

Our main Python code will automatically start on core 0, but we can then tell the _thread package to start another block of code on core 1.

new_thread = _thread.start_new_thread(<thread_function>, args [, kwargs])

thread_function is a reference to a standard Python function that contains the code for the new thread. This must be followed by a tuple containing the function arguments and then an optional dictionary or keyword arguments for the function.

This method call will return a reference to the new thread which can be used for information and control.

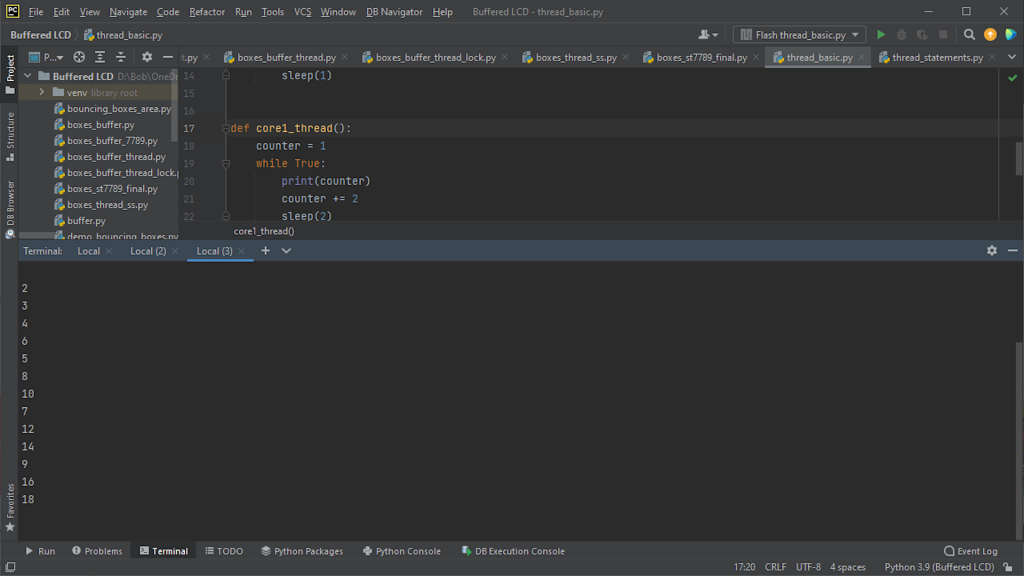

The easiest way to see this working is to create a very simply threaded example.

Multi-Threading Example

To get our code to work on two cores we need to break it up into two separate code sections.

For this example we’ll create one code loop that will print out an even number every 1 second to the console, and another that will print out an odd number every two seconds.

Each block of code needs to be wrapped in its own function that can be called from the main Python thread.

"""

Basic multi thread example

"""

from time import sleep

import _thread

def core0_thread():

counter = 0

while True:

print(counter)

counter += 2

sleep(1)

def core1_thread():

counter = 1

while True:

print(counter)

counter += 2

sleep(2)

second_thread = _thread.start_new_thread(core1_thread, ())

core0_thread()

The function called in the normal way will be run on the default core (0) and simply follow on in the main code flow. The one called using the _thread library will then be sent over to core 1 and run there.

So uploading this to the Pico and running the code through the REPL console will give us an output built from each of the threads.

And that’s the basics of multi-threaded coding in MicroPython on the Raspberry Pi Pico.

Communication Between Threads

So as you’ve seen getting two threads to work in parallel is really easy. Where things start to get a bit more complicated is when the two threads need to talk to each other and share resources.

Talking to eachother is fairly straightforward. Both threads share the global namespace in your code. So if you define a variable outside of any function or class it will available to both threads.

In this example we create a run_core_1 flag variable which the thread on core 0 uses to control the output from the core 1 thread.

"""

Using global variable for inter thread communication

multi thread example

"""

from time import sleep

import _thread

def core0_thread():

global run_core_1

counter = 0

while True:

# print next 5 even numbers

for loop in range(5):

print(counter)

counter += 2

sleep(1)

# signal core 1 to run

run_core_1 = True

# wait for core 1 to finish

print("core 0 waiting")

while run_core_1:

pass

def core1_thread():

global run_core_1

counter = 1

while True:

# wait for core 0 to signal start

print("core 1 waiting")

while not run_core_1:

pass

# print next 3 odd numbers

for loop in range(3):

print(counter)

counter += 2

sleep(0.5)

# signal core 0 code finished

run_core_1 = False

# Global variable to send signals between threads

run_core_1 = False

second_thread = _thread.start_new_thread(core1_thread, ())

core0_thread()

When the core 0 thread sets the flag to true, core 1 will detect the value and run its loop. When the flag gets reset to false core 1 will go into a waiting mode.

We could of course wrap this flag up in a class so that we could easily share the data across code files. Global variables are never a great idea, especially if you’re using multiple files as they clutter up your code and can be hard to maintain.

"""

Using Flag class for inter thread communication

multi thread example

"""

from time import sleep

import _thread

class Flag:

run_core_1 = False

@classmethod

def set_run_flag(cls):

cls.run_core_1 = True

@classmethod

def clear_run_flag(cls):

cls.run_core_1 = False

@classmethod

def get_run_flag(cls):

return cls.run_core_1

def core0_thread():

counter = 0

while True:

# print next 5 even numbers

for loop in range(5):

print(counter)

counter += 2

sleep(1)

# signal core 1 to run

Flag.set_run_flag()

# wait for core 1 to finish

print("core 0 waiting")

while Flag.get_run_flag():

pass

def core1_thread():

global run_core_1

counter = 1

while True:

# wait for core 0 to signal start

print("core 1 waiting")

while not Flag.get_run_flag():

pass

# print next 3 odd numbers

for loop in range(3):

print(counter)

counter += 2

sleep(0.5)

# signal core 0 code finished

Flag.clear_run_flag()

Flag.clear_run_flag()

second_thread = _thread.start_new_thread(core1_thread, ())

core0_thread()

Sharing Resources

Sometimes we need to be very careful about who and when a thread can have access to some data, or some resource, e.g. the SPI interface. If both threads try to use or update the same resource at the same time we’ll either get corrupted data or potentially crash part of our code.

There are a number of ways to achieve this control. One is to use the simple flag mechanism above and this will work for more basic, well defined situations. But when you need more flexible control you need to use a Lock.

A Lock (or semaphore in concurrent programming) allows us to control access to anything. We create a Lock object and only the owner of the Lock can use the resource. Every other thread has to wait for the Lock owner to release it before one of the waiting threads can take ownership and get access to the resource.

For example two threads need to use a single resource. If they access it at the same time the data will get corrupted. We create a lock and each thread can only access the resource when it acquires control of the lock. Initially the lock is open. The core 0 thread is then able to acquire the lock and start interacting with the resource. The core 1 thread is now blocked from using the resource. It can request access but will have to wait for core 0 to release the lock.

Once core 0 finishes it releases the lock and puts it back into the unlocked state. Noe the core 1 thread is able to acquire the lock and start its processing.

So that’s the principle of the lock. Let’s see a coded example.

In this example we have two threads that are writing continuously to the console, one character at a time. The console runs on a serial channel so there is only a single stream of characters that it can send at one time. If two threads are using it together we get a mix of the two messages coming over the serial output (you probably noticed a bit of this in the previous example where one thread could squeeze a character in between the end of a string and the newline character).

"""

Using global variable for inter thread communication

multi thread example

"""

from time import sleep

import _thread

def core0_thread():

while True:

print('C')

sleep(0.5)

print('O')

sleep(0.5)

print('R')

sleep(0.5)

print('E')

sleep(0.5)

print('0')

sleep(0.5)

def core1_thread():

while True:

print('c')

sleep(0.5)

print('o')

sleep(0.5)

print('r')

sleep(0.5)

print('e')

sleep(0.5)

print('1')

sleep(0.5)

second_thread = _thread.start_new_thread(core1_thread, ())

core0_thread()

If we put a Lock on the console we can stop this overlap.

"""

Using global variable for inter thread communication

multi thread example

"""

from time import sleep

import _thread

def core0_thread():

global lock

while True:

# try to acquire lock - wait if in use

lock.acquire()

print('C')

sleep(0.5)

print('O')

sleep(0.5)

print('R')

sleep(0.5)

print('E')

sleep(0.5)

print('0')

sleep(0.5)

# release lock

lock.release()

def core1_thread():

global lock

while True:

# try to acquire lock - wait if in use

lock.acquire()

print('c')

sleep(0.5)

print('o')

sleep(0.5)

print('r')

sleep(0.5)

print('e')

sleep(0.5)

print('1')

sleep(0.5)

# release lock

lock.release()

# create a global lock

lock = _thread.allocate_lock()

second_thread = _thread.start_new_thread(core1_thread, ())

core0_thread()

The Lock itself is a separate object. It doesn’t stop a thread from sending data to the console, we have to write our code so that it obeys the Lock.

Each thread asks for ownership of the Lock. Only one will get it.

The thread that doesn’t get the Lock will have to wait for its turn. Acquiring a lock with no parameters uses the default ‘wait until it’s free’ method, so your code will pause at this point.

The thread that does get ownership can then send its message. Once that’s complete it can then release the lock.

The thread that was waiting will not see that the lock is free and will take ownership so that it can process its message.

When the first owner tries to regain control of the lock it will see that it is in use and have to wait its turn.

Polling the Lock

The basic lock acquire method means that one of your threads will simply go into a waiting state until it gets hold of the lock. This might not be what you want to do. For example you might be collecting data and want to use the serial channel to send it. If you can’t use the channel you still need to continue gathering data and can simply try to send at a later time.

In this example we’ll set the core 0 thread to continuously poll the lock to see if it’s available. If not, it will continue processing by counting how many times it has polled the lock before getting ownership.

def core0_thread():

global lock

while True:

wait_counter = 0

# try to acquire lock but don't wait

while not lock.acquire(0): # lock.acquire(waitflag=1, timeout=-1)

# count the number of times the lock is polled

# Our code is not in a waiting state

# we are just polling the lock at the end of each loop iteration

wait_counter += 1

print("CORE 0 - I counted to " + str(wait_counter) + " while waiting")

print('C')

sleep(0.5)

print('O')

sleep(0.5)

print('R')

sleep(0.5)

print('E')

sleep(0.5)

print('0')

sleep(0.5)

# release lock

lock.release()

The acquire method call takes two positional parameters. The first is the Boolean waitflag. 0 means don’t wait. The second is the timeout value. This is the amount of time to wait in seconds before the method returns when set to wait.

If we run this version you’ll see that we don’t get the threads taking access to the console in turns. When core 0 tries to get control it checks but then goes of and does some more processing before coming back to check again. Core 1 uses the wait method so is primed to take control as soon as the lock is released. As soon as core 1 releases the lock it immediately tries to acquire it again. You can see that some times core 1 is able to complete, release and re acquire the lock while core 0 is updating its poll counter.

So do bear this in mind when running multiple threads that you need to make sure that one thread isn’t going to be greedy when it comes to taking control of resources.

So that’s the basics of concurrent coding using the _thread package. Now let’s see it in use in a real example.

SPI LCD Driver

This code is a development of my SPI LCD panel display driver. I’ll be covering the multi core version in more detail in a separate tutorial, but I want to use it as an example here to show how software can be broken up and the issues you might come across when using multiple cores.

Core 0, which is the default core our Micropython code runs on, updates the models in our code and then starts the rendering process, drawing objects onto the frame buffer memory space. Once this process finishes we need the SPI handling process to start drawing the frame to the LCD panel.

This frame buffer memory object is the resource that we need to control access to. Only one process can use it at any one time otherwise we end up with half drawn frames getting sent to the LCD panel.

Core 1 runs the SPI handler code which initially sits waiting for access to the frame buffer lock. Once it has that it assumes a frame has been prepared and the memory buffer needs to be sent to the LCD panel. So, once it acquires the lock controlling the buffer it sends the framebuffer to the LCD panel and when finished, releases the lock to allow the render thread access.

In our core 0 thread the update model code can run in parallel with the SPI handler so only the code where it renders objects onto the frame buffer memory needs to be controlled so it doesn’t clash with the other thread. This means that our code is able to use the slow SPI transfer time to complete the majority of our model processing.

Our code pauses at the start of the render process waiting for the lock to be acquired and then draws the objects into the buffer. It then releases the lock and lets the SPI handler on core 1 have control of the memory map.

Crash!

If we run this code we get an odd output. The boxes seem to run, but then freeze!

This did take me a while to work out what was wrong, but it seems to be a sort of memory leak in the threading system. As the new thread is processing it must be writing temporary data to the system heap RAM for local variables etc. This doesn’t appear to get cleaned fast enough by the garbage collection process.

To get it to work I needed to add an explicit garbage collection call at the end of the SPI handler loop.

import gc # import garbage collector library at top of code gc.collect() # run garbage collection

Again, this is an experimental package so I guess there are still a few bugs to iron out!

Code Races

With the garbage collection in place everything seems to run fine. But if we examine the code in a bit more detail we’ll see that there are some situations where we could either miss, or duplicate frames.

There are two code races in our code.

When our render code finishes it releases the lock. It then loops back to the update model code before again trying to acquire the lock for the next frame render. This code assumes the SPI handler will take control of the lock before the main thread has finished the update process. If it doesn’t, the main thread will simply re acquire the lock and process the next frame causing a skipped frame.

This situation is overcome by the fact that once the SPI handler releases the lock it immediately loops round and tries to acquire the lock, using the waiting technique. As soon as the render thread releases the lock the SPI thread will be waiting to take control.

However it does show the second race condition.

When the SPI thread acquires the lock and starts sending data to the LCD panel the main thread starts to process the model updates. If the model update process takes a long time the SPI thread will finish, release the lock and then try to re acquire it. Usually the render thread will be waiting to take control, but if the model updates haven’t finished the lock will be available for the SPI thread. It will simply re acquire the lock and resend the last frame out to the LCD giving us a duplicated frame which will create a delay before the next new frame gets sent.

The issue here is that neither thread knows what the other thread is doing. We could get around this by using flags to show thread states, etc.

Or we can create more control of the use of threads by rethinking our parallel processing which might also remove our need for explicit garbage collection.

Rethinking Our Threads

So far all our threads have run as infinite loops so that they are always active and always in parallel. This doesn’t have to be the case.

We can start, stop and restart threads whenever we want. A thread will exit if you perform a return from within the function that contains the thread code and you can then restart it using the normal start_new_thread method call.

In this version of the code we initially only have a single thread. When the core 0 thread finishes drawing a frame to the buffer memory it starts the SPI handler on the second core. It also sets a global flag variable to say that the frame is being rendered to the LCD.

def main_loop():

"""Test code."""

global fbuf, buffer, buffer_width, buffer_height

global render_frame

render_frame = False

try:

boxes = [Box(buffer_width - 1, buffer_height - 1, randint(7, 40),

color565(randint(30, 256), randint(30, 256), randint(30, 256))) for i in range(100)]

print(free(True))

start_time = ticks_us()

frame_count = 0

while True:

for b in boxes:

b.update_pos()

while render_frame:

# previous frame still rendering

pass

for b in boxes:

b.draw()

# render frame to lcd

render_frame = True

# start spi handler on core 1

spi_thread = _thread.start_new_thread(render_thread, (2,))

frame_count += 1

if frame_count == 100:

frame_rate = 100 / ((ticks_us() - start_time) / 1000000)

print(frame_rate)

start_time = ticks_us()

frame_count = 0

except KeyboardInterrupt:

pass

def render_thread(id):

global fbuf, buffer, buffer_width, buffer_height, render_frame, spi

global display, screen_width, screen_height, screen_rotation

# No need to wait for start signal as thread only started when buffer is ready

# render display

display.block(int((320 - buffer_width) / 2), int((240 - buffer_height) / 2),

int((320 - buffer_width) / 2) + buffer_width - 1,

int((240 - buffer_height) / 2) + buffer_height - 1, buffer)

# clear buffer

fbuf.fill(0)

# signal finished back to main thread

render_frame = False

# thread will exit and self clean removing need for garbage collection

return

The SPI render thread can start sending the frame data to the LCD immediately as it is only ever started when the frame buffer is free for use, so no lock is required. Once it has finished the LCD transfer it resets the rendering flag to let the main thread know it’s finished sending the frame and then simply returns to exit the thread.

When the thread exits it automatically clears up any heap data it’s left behind cleaning itself out of the Pico memory and is then ready for the next run request.

So this gives us our finished solution.

Conclusion

So that’s multi-threading on the Raspberry Pi Pico. Hopefully I’ve given you enough information to use this powerful technique in your own projects. As we’ve seen starting processes on the second core is not that difficult in MicroPython and with a little thought we can make sure that our processes play nicely together and can communicate and share data and resources.